Nota Bene: Adam Smith turns his attention to the role of government, law, and public finance in the fifth and final book of The Wealth of Nations (available here). In brief, Book V contains three chapters and is structured as follows: Chapter 1 surveys the essential duties that government must perform and thus the expenses it must incur in order to carry out these duties, while Chapters 2 and 3 survey the main methods a government can use to finance these expenses: taxes and public debt. Chapter 1, in turn, is divided into three separate parts, with each part corresponding to one of the three main duties of government: national defense (part 1), the administration of justice (part 2), and public works (part 3). Today, I will discuss Smith’s thoughts on national defense (WN, V.i.a).

Are standing armies dangerous to liberty, as Alexander Hamilton warned, or do standing armies somehow end up promoting liberty? Adam Smith answers this key question in Part 1 of Chapter 1 of Book V of The Wealth of Nations as follows:

- The noblest of all arts. First off, Smith says that national defense is not only the “first duty” of government (V.i.a.1); it is also “the noblest of all arts.” (V.i.a.14) Why the noblest? Because a strong and well-trained military protects people who are incapable of protecting themselves: “An industrious, and upon that account a wealthy nation, is of all nations the most likely to be attacked; and unless the state takes some new measures for the public defence, the natural habits of the people render them altogether incapable of defending themselves.” (V.i.a.15)

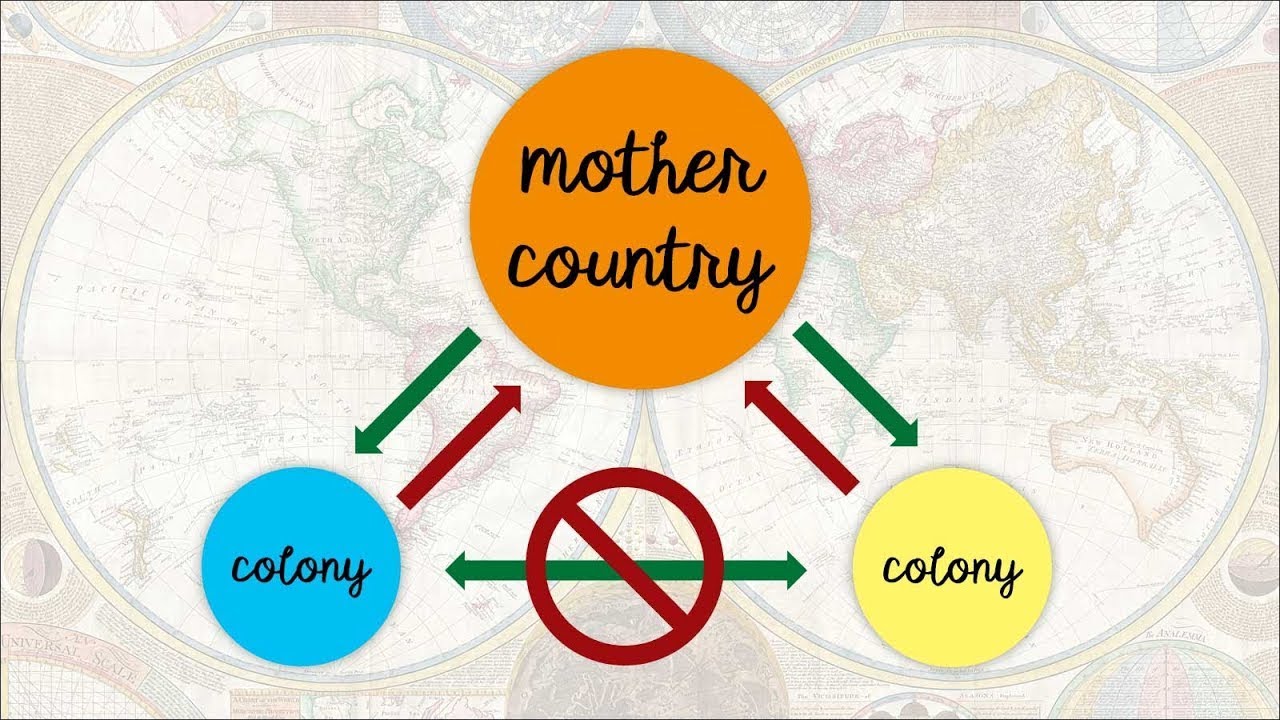

- Standing armies > citizen militias. Although Smith is a champion of natural liberty, in this chapter he gives three irrefutable reasons why professional and permanent standing armies are hands-down “superior” to amateur and part-time citizen militias: training, reaction time, and the division of labor. (See especially WN, V.i.a.28-40.) After all, when the bullets start flying, who is more likely to stand their ground and fight: well-trained soldiers who are used to following the orders of a central command, or a bunch of rank amateurs under dispersed and local commanders? Also, in times of war or invasion, time itself is of the essence, and a standing army is ready and prepared to react to any emergency; a militia, by contrast, must be assembled and trained before it can join the field of battle.

- Standing armies and liberty. But Smith’s most original and surprising observation appears in Paragraph 41 of Part 1 of Chapter 1 of Book V. Here, the Scottish philosopher turns the main argument against a standing army — that it will be used by the government to quash dissent at home — on its head(!):

“Men of republican principles have been jealous of a standing army as dangerous to liberty. It certainly is so wherever the interest of the general and that of the principal officers are not necessarily connected with the support of the constitution of the state. The standing army of Caesar destroyed the Roman republic. The standing army of Cromwell turned the Long Parliament out of doors. But where the sovereign is himself the general, and the principal nobility and gentry of the country the chief officers of the army, where the military force is placed under the command of those who have the greatest interest in the support of the civil authority, because they have themselves the greatest share of that authority, a standing army can never be dangerous to liberty. On the contrary, it may in some cases be favourable to liberty. The security which it gives to the sovereign renders unnecessary that troublesome jealousy, which, in some modern republics, seems to watch over the minutest actions, and to be at all times ready to disturb the peace of every citizen. Where the security of the magistrate, though supported by the principal people of the country, is endangered by every popular discontent; where a small tumult is capable of bringing about in a few hours a great revolution, the whole authority of government must be employed to suppress and punish every murmur and complaint against it. To a sovereign, on the contrary, who feels himself supported, not only by the natural aristocracy of the country, but by a well-regulated standing army, the rudest, the most groundless, and the most licentious remonstrances can give little disturbance. He can safely pardon or neglect them, and his consciousness of his own superiority naturally disposes him to do so. That degree of liberty which approaches to licentiousness can be tolerated only in countries where the sovereign is secured by a well-regulated standing army….” (WN, V.i.a.41; my emphasis)

Notice, however, how Smith qualifies his libertarian defense of standing armies with the words “in some cases.” So, how can we distinguish between those standing armies that expand the circle of liberty at home and those that reduce it? Between, for example, the armies of the two Koreas? Regardless, we will proceed to Part 2 of Chapter 1 of Book V in my next post.